MODEL IDENTIFICATION, SIMULATION AND SYSTEMS OPTIMIZATION

Description

Methodology overview. Starting from a raw dataset, we select the minimal set of features needed to build the model. The model is then simulated and optimized, and the resulting model is finally implemented in hardware.

The GreenDISC group, part of the Department of Computer Architecture and Automation at the Complutense University of Madrid, has developed new Modeling and Simulation (M&S) techniques. These techniques are based on Model Identification, Multi-Variable Optimization, Complex Simulation Tools, and Feature and Knowledge Extraction from complex and heterogeneous data sources. In many cases, the models derived for a wide range of practical problems have been integrated into a DEVS simulation framework (DEVS stands for Discrete EVent System Specification, a modeling formalism). The resulting models have also been optimized using, among other techniques, Machine Learning and Evolutionary Computation.

Our research focuses on practical problems where a global modeling and optimization strategy is especially useful. These problems are highly complex and cannot easily be tackled with classic modeling, simulation, and optimization techniques; they therefore demand a heterogeneous, multi-level approach. To this end, our work starts from a precise understanding of the problem: we develop techniques and methodologies that begin with data acquisition in real environments and take into account the practical constraints of each scenario.

In particular, our techniques have already been successfully applied to use cases in Health Sciences (ambulatory and wireless monitoring for the prediction of symptomatic crises) and Computer Engineering (modeling of data center energy consumption).

How does it work

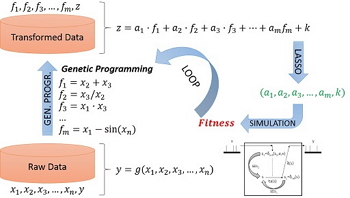

First, a representative dataset of the system to be modeled is required. Next, by combining Genetic Programming techniques with classic regression algorithms such as LARS (Least Angle Regression) or LASSO (Least Absolute Shrinkage and Selection Operator), and with Machine Learning, we obtain: (1) the set of features, or input variables, that are representative for the model, and (2) the model itself, expressed as a function of these features.
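
To make the regression-based part of this step concrete, the sketch below fits a LASSO model by cyclic coordinate descent and keeps the variables whose coefficients survive the L1 penalty. It is a minimal pure-Python illustration under stated assumptions, not the group's implementation: the function names, the penalty weight `lam`, the fixed iteration count, and the synthetic data are hypothetical, the inputs are assumed to be on comparable scales, and the Genetic Programming stage is not shown.

```python
import random
from typing import List, Sequence


def soft_threshold(rho: float, lam: float) -> float:
    """Proximal operator of the L1 penalty: shrinks rho toward zero by lam."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_coordinate_descent(
    X: Sequence[Sequence[float]], y: Sequence[float], lam: float, n_iter: int = 200
) -> List[float]:
    """Minimize (1/2n)*||y - Xw||^2 + lam*||w||_1 by cyclic coordinate descent.

    Columns of X and the target y are centered first, so no intercept is fitted.
    """
    n, p = len(X), len(X[0])
    col_means = [sum(row[j] for row in X) / n for j in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[j] - col_means[j] for j in range(p)] for row in X]
    residual = [v - y_mean for v in y]  # y - Xw, with w = 0 initially
    z = [sum(Xc[i][j] ** 2 for i in range(n)) / n for j in range(p)]
    w = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            if z[j] == 0.0:
                continue  # a constant column carries no information
            # Correlation of feature j with the residual that excludes feature j
            rho = sum(Xc[i][j] * (residual[i] + Xc[i][j] * w[j]) for i in range(n)) / n
            new_wj = soft_threshold(rho, lam) / z[j]
            delta = new_wj - w[j]
            if delta != 0.0:
                # Keep the residual consistent with the updated coefficient
                for i in range(n):
                    residual[i] -= Xc[i][j] * delta
                w[j] = new_wj
    return w


def selected_features(w: Sequence[float], names: Sequence[str], tol: float = 1e-8) -> List[str]:
    """Names of the variables whose coefficient survived the L1 shrinkage."""
    return [name for name, wj in zip(names, w) if abs(wj) > tol]


if __name__ == "__main__":
    random.seed(0)
    names = ["x0", "x1", "x2", "x3", "x4"]
    X = [[random.gauss(0, 1) for _ in names] for _ in range(200)]
    # Hypothetical target that depends only on x0 and x3, plus small noise
    y = [3.0 * r[0] - 2.0 * r[3] + random.gauss(0, 0.1) for r in X]
    w = lasso_coordinate_descent(X, y, lam=0.1)
    print(selected_features(w, names))
```

Larger values of `lam` drive more coefficients to exactly zero, so the penalty controls how small the selected feature set is. LARS can compute the whole path of LASSO solutions as `lam` varies; coordinate descent at a single `lam` is used here only because it is short.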

Acquiring the dataset needed to define a model may require deploying a wireless sensor network, ambulatory (remote) monitoring of physiological parameters and activity, or defining sampling techniques for hardware/software sensors. In these fields, our research group develops such techniques, implements the hardware equipment required for signal acquisition, and selects the signal processing algorithms best suited to the characteristics of the monitored variables.

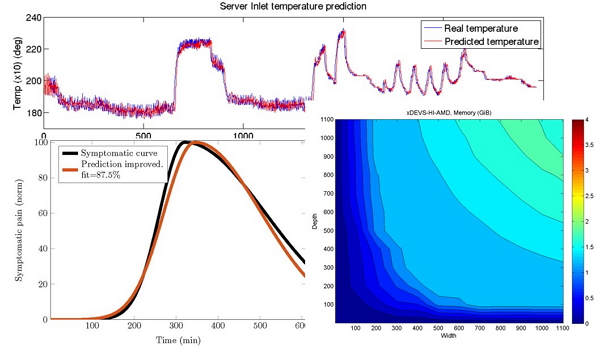

Examples of models obtained in real cases. From top to bottom: (1) predictive model of a server inlet temperature; (2, left) predictive model for migraine crises; (2, right) model developed to evaluate the performance of several simulators.

Often, the resulting model, or even the modeled system itself, requires an optimization cycle. Typical goals include minimizing health risks, energy, or cost, and maximizing performance. A varied set of optimization techniques is applied for this purpose: MILP (Mixed Integer Linear Programming), Simulated Annealing, Genetic Algorithms, Particle Swarm Optimization, Genetic Programming, Multi-Objective Optimization, etc. In some cases, we target a more robust system by including adaptive features in the model.
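
Simulated annealing, one of the techniques listed above, can be sketched in a few lines. The example below is a hypothetical illustration, not one of the group's tools: it minimizes an arbitrary cost function over a real-valued parameter vector, and the neighborhood (uniform perturbation of width `step`), the geometric cooling factor, and the iteration budget are assumptions chosen for brevity.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def simulated_annealing(
    cost: Callable[[Sequence[float]], float],
    x0: Sequence[float],
    step: float = 0.5,
    t0: float = 1.0,
    cooling: float = 0.995,
    n_iter: int = 5000,
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize `cost` from the starting point x0.

    Returns the best point visited and its cost.
    """
    rng = random.Random(seed)
    x = list(x0)
    fx = cost(x)
    best, fbest = list(x), fx
    t = t0
    for _ in range(n_iter):
        # Neighbor: perturb every coordinate with uniform noise in [-step, step]
        cand = [xi + rng.uniform(-step, step) for xi in x]
        fc = cost(cand)
        # Metropolis rule: accept improvements; accept worse moves with prob. exp(-delta/T)
        if fc <= fx or rng.random() < math.exp(-(fc - fx) / t):
            x, fx = cand, fc
            if fx < fbest:
                best, fbest = list(x), fx
        t *= cooling  # geometric cooling schedule
    return best, fbest


if __name__ == "__main__":
    # Hypothetical cost: a quadratic bowl with its minimum at (1, -2)
    def bowl(v: Sequence[float]) -> float:
        return (v[0] - 1.0) ** 2 + (v[1] + 2.0) ** 2

    best, fbest = simulated_annealing(bowl, [0.0, 0.0])
    print(best, fbest)
```

Because worse moves are accepted with probability exp(-Δ/T), the search can escape local minima while the temperature is high; tracking the best point separately guarantees that the returned solution is the best one visited. In practice, `cost` could wrap a model evaluation or a full simulation run.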

Finally, the system is implemented in hardware, if needed. This implementation is carried out step by step. We first incorporate hardware prototypes into the software model. Then, in a Hardware/Software co-simulation environment, the target system is verified and validated by repeating the whole previous procedure, this time under real, online conditions.

Advantages

Specific view of our methodology. The feature selection phase is tackled with genetic programming and regression algorithms. Through modeling and simulation, we can iteratively improve the obtained model while optimizing all the parameters of the real system.

This particular approach, together with the new techniques applied to model identification, allows us to derive new models of systems that were previously completely unknown. In addition, the method permits the joint use of knowledge-based techniques, which make these models easier to understand and to adapt to the needs of the end user.

M&S scalability is not a major issue in our case because, under the DEVS formalism, the model and the simulator are completely independent. Parallelizing and distributing simulations is therefore orthogonal to the model implementation.
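
The independence between model and simulator can be illustrated with a DEVS atomic model. In the sketch below, the model only defines the standard DEVS functions (time advance `ta`, external transition `delta_ext`, output function λ as `output`, and internal transition `delta_int`), while `simulate` drives any object exposing those methods without knowing its internals. The `Processor` model, the method names, and the tie-breaking rule (internal events fire before simultaneous external ones) are hypothetical choices for this example and do not reproduce the API of any particular DEVS framework; coupled models and parallel or distributed execution are omitted.

```python
import math
from typing import List, Optional, Tuple


class Processor:
    """Hypothetical DEVS atomic model: a server that holds one job at a time.

    State: phase ("passive" or "busy"), the current job, and sigma (time left).
    """

    def __init__(self, service_time: float) -> None:
        self.service_time = service_time
        self.phase: str = "passive"
        self.job: Optional[str] = None
        self.sigma: float = math.inf

    def ta(self) -> float:
        """Time advance: time until the next internal event."""
        return self.sigma

    def delta_ext(self, e: float, job: str) -> None:
        """External transition; e is the time elapsed since the last transition."""
        if self.phase == "passive":
            self.phase, self.job, self.sigma = "busy", job, self.service_time
        else:
            self.sigma -= e  # busy: drop the incoming job, keep counting down

    def output(self) -> Optional[str]:
        """Output function (lambda), evaluated just before delta_int."""
        return self.job

    def delta_int(self) -> None:
        """Internal transition: the job is finished and the server goes idle."""
        self.phase, self.job, self.sigma = "passive", None, math.inf


def simulate(model, inputs: List[Tuple[float, str]], t_end: float) -> List[Tuple[float, Optional[str]]]:
    """Drive any object exposing ta/delta_ext/output/delta_int; knows nothing else."""
    events = sorted(inputs)
    k, t_last = 0, 0.0
    outputs: List[Tuple[float, Optional[str]]] = []
    while True:
        t_int = t_last + model.ta()
        t_ext = events[k][0] if k < len(events) else math.inf
        t = min(t_int, t_ext)
        if t == math.inf or t > t_end:
            break
        if t_int <= t_ext:
            # Internal event (fires first on ties): emit output, then change state
            outputs.append((t, model.output()))
            model.delta_int()
        else:
            model.delta_ext(t - t_last, events[k][1])
            k += 1
        t_last = t
    return outputs


if __name__ == "__main__":
    jobs = [(0.0, "a"), (1.0, "b"), (3.0, "c")]
    print(simulate(Processor(service_time=2.0), jobs, t_end=10.0))
    # [(2.0, 'a'), (5.0, 'c')]  ("b" arrives while busy and is dropped)
```

Replacing `simulate` with a parallel or distributed simulator would leave `Processor` untouched, which is the property this scalability argument relies on.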

Finally, this agnostic model generation (which requires no prior knowledge of the problem) allows us to identify and select the variables that really affect the dynamics of the system, providing broad insight into the problem at hand. This is not achievable with classic techniques because of the large number of variables they usually have to handle.

Where has it been developed

Our research group at the Department of Computer Architecture and Automation has developed several approaches to modeling patients with neurological diseases and to predicting symptomatic crises in both migraine and stroke, in collaboration with the Hospital Universitario de la Princesa in Madrid.

We have also applied Feature Engineering, in collaboration with the Supercomputing and Visualization Center of Madrid (CeSViMa), to obtain energy consumption prediction models for data centers, including the thermal effects produced by the computing services and by the cooling systems installed in the data center rooms.

In all the studies performed, our team has deployed wireless sensor networks for continuous ambulatory monitoring of several descriptive variables. Likewise, whenever commercial products did not offer a suitable solution, we have designed and implemented our own wireless monitoring nodes. These nodes were developed in collaboration with companies in this sector.

With respect to DEVS modeling and simulation, our research group works in collaboration with ACIMS (Arizona Center for Integrative Modeling and Simulation) at the University of Arizona. Several of our simulators have been developed in collaboration with DUNIP Technologies.

Regarding optimization, our group has experience with a broad range of numerical and non-numerical optimization methods and tools, with an emphasis on Evolutionary Computation methods.

In the academic field, our students also contribute to advancing these areas through several bachelor's and master's projects and doctoral theses, all focused on these areas of expertise.

And also

The GreenDISC group of the Department of Computer Architecture and Automation offers:

- Collaboration on research projects related to feature engineering, bioengineering, energy efficiency, simulation, optimization and hardware design and implementation.

- Development of ad hoc tools for implementing models and their corresponding simulations.

- Training in feature engineering, discrete event simulation, optimization, and hardware design and implementation.

Contact

© Office for the Transfer of Research Results – UCM

Responsible Researchers

José Luis Risco Martín: jlrisco@ucm.es

José Luis Ayala Rodrigo: jayala@ucm.es

Department: Computer Architecture and Automation

Faculty: Computer Sciences